By incorporating the semantic which means of text into the search course of, we can achieve extra correct and environment friendly doc retrieval. However, choosing the right embedding model and understanding the underlying technologies are essential for profitable implementation. As LLMs continue to evolve, the way ahead for semantic search holds even larger potential for advancing the sphere of knowledge retrieval and natural language understanding. On the other hand, comparing the primary document with one thing like “The quick brown fox jumped over the whatever” would lead to a low semantic similarity rating. By representing text semantics on this multi-dimensional space, semantic search algorithms can efficiently and accurately establish relevant paperwork.

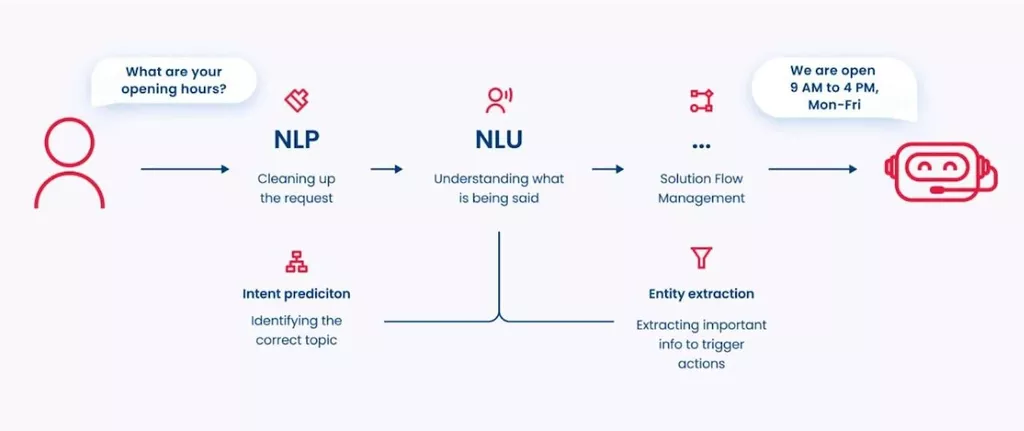

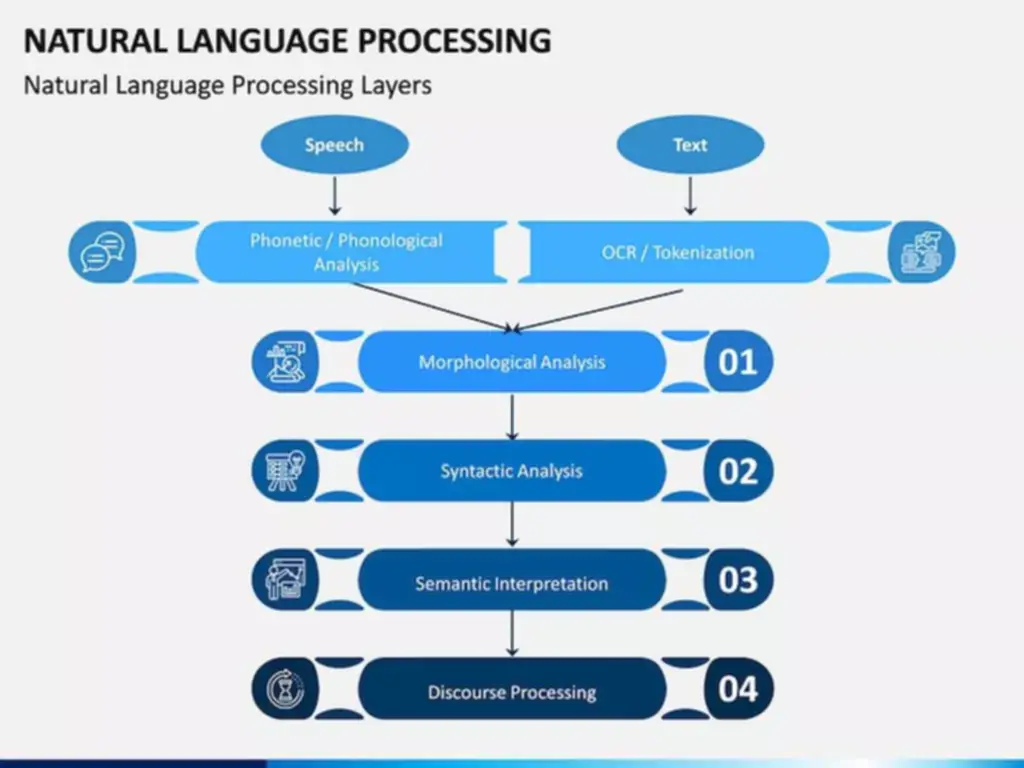

Semantic search goes past traditional keyword-based search methods by considering the that means and intent behind a user’s query. It leverages text embeddings, which are multi-dimensional numerical representations of “meaning” generated by language models. These embeddings capture the semantic relationships between words and phrases, allowing for more nuanced and context-aware document retrieval.

Words like “doctor,” “patient,” and “diagnosis” would be close together, whereas “car” and “engine” would reside in a special area. The researchers also tried intervening in the model’s inner layers utilizing English textual content when it was processing other languages. They found that they might predictably change the model outputs, although those outputs had been in other languages. To take a look at this hypothesis, the researchers passed a pair of sentences with the identical that means however written in two completely different languages via the mannequin.

In that case, the user may merely enter “table furniture” and retrieve relevant results — including outcomes that don’t explicitly include the time period “furniture”. The results may not even contain the word “table” however, say, “workbench” or “desk” as an alternative. Implementing Giant Language Models (LLMs) for semantic search presents several challenges that have to be addressed to optimize efficiency and accuracy. A powerful method is to decouple the paperwork https://www.globalcloudteam.com/ listed for retrieval from these handed to the LLM for technology. This separation permits for extra efficient processing and retrieval of relevant info.

Step 4: Enable Pgvector For Vector Operations

Given a user query’s semantic representation, this algorithm identifies probably the most relevant paperwork based mostly on their proximity in the embedding space. By calculating the similarity scores between query embeddings and doc embeddings, semantic search techniques can rank and current probably the most appropriate documents to the person. This blog submit is about constructing a getting-started example for semantic search utilizing vector databases and large language fashions (LLMs), an instance of retrieval augmented generation (RAG) architecture.

Leveraging Large Language Models For Supply Chain Optimization

For occasion, a mannequin that has English as its dominant language would depend on English as a central medium to process inputs in Japanese or purpose about arithmetic, pc code, and so on. In conclusion, LLM-powered semantic search and subject mapping marks a major improvement over traditional strategies of knowledge retrieval and textual content mining, providing extra contextually relevant results. As the technology continues to evolve, it holds the potential to revolutionize how we access and interpret huge amounts of textual knowledge. The integration of LLMs like BERT or GPT-n into search algorithms demonstrates a significant leap from conventional lexical search strategies.

However, there are different options obtainable, including open-source options, albeit with varying degrees of energy and ease of use. Even without coaching or finetuning an LLM, operating inference on a big database of documents introduces considerations relating to computational sources. Furthermore, the shortage of explainability inherent in these fashions poses a hurdle in understanding why certain results are retrieved, and how to highlight related keywords in search results. Alternatively, they could use a search engine that would permit for semantic search.

Large language fashions (LLMs) are driving the speedy progress of semantic search functions. Semantic search understands each consumer intent and content context, rather than simply matching keywords. LLMs enhance this capability by way of their advanced language processing skills. These AI fashions can process multiple content codecs, together with text, images, audio, and video.

One key technology that has been launched in 2018 is the large language mannequin (LLM) called BERT (Bidirectional Encoder Representations from Transformers) Devlin & Change 2018, Nayak 2019. The integration of Large Language Models into supply chain optimization processes presents vital advantages, including improved efficiency, reduced costs, and enhanced decision-making capabilities. By leveraging these advanced applied sciences, organizations can navigate the complexities of contemporary provide chains extra successfully. However for content-rich websites like information media sites or on-line buying platforms, the keyword search functionality could be limiting. Incorporating large language fashions (LLMs) into your search can considerably enhance the person expertise by permitting them to ask questions and discover information in a much simpler means.

In our implementation, we demonstrated how embeddings and indexing may be carried out using FAISS as the vector library, or in different with OpenSearch as the vector database. We then moved onto the semantic question process using similarity search and vector DB indexes. To finalize the results, we utilized an LLM to transform the related doc snippets into a coherent textual content reply. Each people and organizations that work with arXivLabs have embraced and accepted our values of openness, neighborhood Cloud deployment, excellence, and consumer data privacy. ArXiv is dedicated to these values and solely works with companions that adhere to them. Right Here, LLMs transcend retrieving present documents and may doubtlessly generate new content that addresses the user’s question.

This creates a motion pictures desk with vector_description column storing 256-dimensional vectors. The dimension value (256) must match the embedding size specified when producing embeddings in Step 2. After working the .NET utility, vector embeddings are generated for every movie. Build dependable and accurate AI agents in code, able to operating and persisting month-lasting processes within the background.

Semantic Search In The Context Of Llms

The customers obtain complete search results throughout totally different media varieties semantic retrieval that match their intent. For instance, a pure language query about “how to make sushi” might return textual content recipes, tutorial videos and step-by-step images. In this weblog submit, we’ve demonstrated the means to build a newbie’s semantic search system utilizing vector databases and enormous language fashions (LLMs). Semantic search makes use of these embeddings to characterize each user queries and paperwork inside your search database. These indexes retailer the embeddings of documents, permitting for environment friendly and quick retrieval in the course of the search process.

In today’s information age , navigating the ever expanding digital ocean may be overwhelming. Conventional keyword-based search engines like google usually fall brief, delivering outcomes primarily based on literal matches quite than understanding the true intent behind a question. These questions highlight the necessity for cautious evaluation and consideration when selecting an embedding mannequin for semantic search functions. It goes beyond literal matches and focuses on understanding the context and intent behind a user’s query. The mannequin assigns related representations to inputs with related meanings, regardless of their data sort, including photographs, audio, pc code, and arithmetic problems. Even though an image and its textual content caption are distinct data sorts, because they share the same meaning, the LLM would assign them comparable representations.

This methodology permits LLMs to access a wealth of external data, improving the relevance and accuracy of generated responses. Incorporating massive language fashions into semantic search systems can greatly enhance their effectiveness, offering users with extra related and contextually applicable results. As technology continues to evolve, the mixing of LLMs will likely turn into a regular practice within the area of data retrieval. It’s a revolutionary method that transcends simple keyword matching and delves into the deeper which means of a user’s search. Right Here, we’ll explore how semantic search leverages the facility of huge language models to deliver a extra relevant and insightful search experience. Semantic search, empowered by LLMs and text embeddings, has revolutionized the way we retrieve data.

- The integration of LLMs like BERT or GPT-n into search algorithms demonstrates a major leap from traditional lexical search strategies.

- To put it merely, semantic search entails representing each consumer queries and documents in an embedding space.

- This showcases the potential of advanced semantic processing strategies in enhancing the capabilities of LLMs in semantic search purposes.

- The model doesn’t have to duplicate that data throughout languages,” Wu says.

They have achieved very spectacular performance, however we’ve little or no knowledge about their inside working mechanisms. MIT researchers probed the inner workings of LLMs to higher perceive how they process such assorted data, and found evidence that they share some similarities with the human brain. Maybe probably the most surprising implication of this statistic is that the early semantic fastText mannequin doesn’t outperform the lexical model, tf — idf. That being mentioned, the more recently trained LLMs clearly outperform each fastText and tf — idf.